Insider threats have always been a concern for security teams, but SaaS has fundamentally changed how they manifest (and how difficult they are to prevent).

In modern organizations, the most significant insider risk comes from employees, contractors, and users who already have legitimate access to sensitive data. Sometimes that access is misused unintentionally.

Other times, it’s abused deliberately - during role changes, performance issues, or when someone is preparing to leave the company and take data, intellectual property, or customer information with them.

What makes SaaS environments uniquely challenging is that this misuse often happens inside normal workflows. In tools like Google Workspace and Slack, sensitive data is routinely accessed, shared, downloaded, and discussed as part of everyday work.

Files are shared through Google Drive links. Sensitive conversations and intel are exchanged in Slack channels. Integrations and OAuth apps quietly expand who (and what) can touch critical data.

As a result, insider threats don’t always look like obvious attacks. They look like trusted users doing things they are technically allowed to do, just in ways that put the organization at risk.

Traditional insider threat prevention approaches weren’t designed for this reality. Annual access reviews, static DLP rules, and alert-heavy monitoring struggle to keep up with continuously changing permissions, collaboration-driven data movement, and users who already know where valuable information lives.

Effective insider threat prevention in SaaS requires a more dynamic approach - one that assumes trusted access will be misused, intentionally or not.

That means reducing the blast radius of access, protecting sensitive data where collaboration happens, and automatically remediating risky behavior before it turns into a security incident.

This guide outlines 20 practical, SaaS-native best practices for preventing insider threats in Google Workspace and Slack, with a focus on detecting misuse of legitimate access and reducing insider risk in real time - not just after damage has been done.

Insider Threat vs. Insider Risk (And Why the Difference Matters)

Insider threat prevention often breaks down because teams focus too narrowly on individual incidents instead of the conditions that make those incidents possible.

An insider threat is a specific event: a departing employee exporting sensitive data, a user sharing intellectual property externally, or a trusted account abusing access to customer information.

An insider risk, by contrast, is the ongoing exposure created by who has access to what, how that access is used, and how easily sensitive data can move through your SaaS environment.

The distinction matters because insider threats - especially malicious ones - rarely appear out of nowhere. They are enabled by accumulated insider risk: excessive permissions, broad sharing defaults, poor visibility into user activity, and limited ability to intervene and remediate exposure before data leaves the organization.

In SaaS platforms like Google Workspace and Slack, insider risk builds up quietly:

- Users retain access to data long after roles change

- Sensitive data spreads across shared drives, channels, and conversations

- Employees share data and files with personal accounts and are never caught

- OAuth apps and integrations extend access beyond direct human users

- GenAI assistants like Gemini or Copilot act as a magnifying glass, making it easy for employees to surface sensitive data they never knew they had access to

These conditions affect all insider scenarios - negligent, compromised, and malicious alike. A careless mistake and an intentional data grab often use the same mechanisms: legitimate access paths that were never meant to support high-risk behavior.

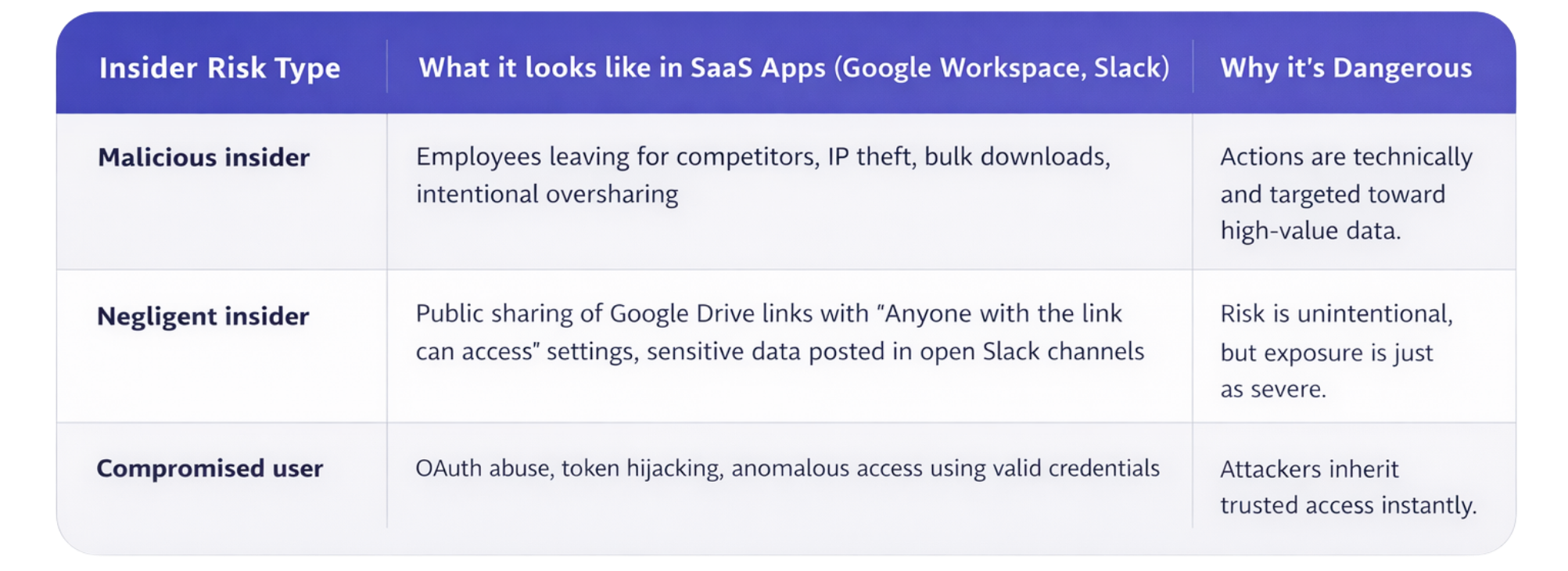

The three insider risk categories in SaaS environments

While intent varies, insider threats in SaaS typically fall into one of three categories: malicious insiders, negligent insiders, and compromised users. Each one represents misuse of legitimate access, and each requires proactive controls to prevent serious damage.

The common thread across all three is not intent: it’s trust. Insider threats succeed because users already know where sensitive data lives and already have the permissions needed to move it.

That’s why effective insider threat prevention requires continuously reducing insider risk by tightening access, protecting sensitive data in collaboration tools, monitoring behavior in context, and remediating risky conditions before they’re exploited.

The Modern SaaS Insider Threat Model in 2026

Preventing insider threats in SaaS starts with a clear mental model of how risk is created - and where security controls actually make a difference.

In SaaS tools like Google Workspace and Slack, insider threats don’t emerge from a single bad decision. They emerge from the interaction of four factors that compound over time: data, access, activity, and remediation.

When any one of these four areas is poorly managed, the likelihood of insider misuse (intentional or not) increases dramatically.

The four layers of insider risk in SaaS

1. Data: What is sensitive, and where does it live?

Insider threat prevention is impossible if you don’t know what you’re trying to protect.

In SaaS environments, sensitive data isn’t confined to a single system. It lives in Google Drive folders, shared documents, spreadsheets, Slack messages, threads, and file uploads.

Intellectual property, customer data, credentials, and internal strategy documents often coexist alongside everyday collaboration content - frequently without clear boundaries.

When sensitive data isn’t clearly identified or classified, users are free to move it in ways that feel harmless but create real risk: sharing it via links, copying it into Slack channels, pasting it into internal LLMs, or downloading it to their endpoints.

2. Access: Who can reach this sensitive data?

Access is the most underestimated driver of insider risk.

In theory, permissions are assigned intentionally. In practice, they expand continuously, and people who shouldn’t have access end up getting it.

Employees change roles and switch departments. Contractors stay shared on files after the relationship ends. Shared drives accumulate members. Slack channels grow without owners. OAuth apps and bots gain broad scopes and are rarely revisited.

For malicious insiders, this overexposure is an opportunity. For negligent insiders, it’s an accident waiting to happen.

The more people (and even non-human identities) that can access sensitive data, the easier it is for that data to be misused, exfiltrated, or shared outside the organization using entirely legitimate mechanisms.

Insider threats don’t require bypassing controls. They exploit permissive ones.

3. Activity: How is access being used?

Access alone doesn’t equal risk; behavior does.

In SaaS platforms, most insider activity happens through actions that are explicitly allowed:

- Sharing files

- Sending documents to personal domains

- Exporting spreadsheets

- Pasting intellectual property into Slack messages

- Installing integrations

What distinguishes normal collaboration from insider risk is context: timing, volume, sensitivity, and deviation from baseline behavior.

Sharing a file with an auditor who uses a personal email address during tax season is routine. Downloading hundreds of sensitive files shortly before an employee’s departure is not.

Without visibility into user behavior - especially when it involves sensitive data - security teams are left reacting to incidents instead of identifying and remediating risk patterns early.

4. Remediation: What happens when risk appears?

This is where many insider threat programs fall apart.

Detection without action doesn’t reduce risk. Alerts that require manual investigation slow the response and allow exposure to continue. In high-velocity SaaS environments, insider threats routinely move faster than human workflows can.

Effective insider threat prevention requires remediation built into the model, not bolted on afterward. That means the ability to:

- Revoke public links

- Remove external collaborators

- Downgrade excessive permissions

- Revoke OAuth grants

- Contain data movement immediately

The goal isn’t just to see insider risk (visibility alone changes nothing); it’s to neutralize it before damage occurs.

Why this model matters

This four-layer model reflects how insider threats actually operate in SaaS environments:

- Sensitive data exists everywhere

- Access is broad and constantly changing

- Risky activity often looks legitimate (until it’s not…)

- Response speed determines impact

Throughout the rest of this guide, each of the 20 best practices maps back to one or more of these layers. Some reduce exposure. Others prevent misuse. Others focus on early detection or fast remediation.

Together, they form a practical, defensible approach to insider threat prevention - one designed for organizations where collaboration is essential, trust is unavoidable, and misuse of access is a risk that must be actively managed.

Insider Threat Prevention: 20 Best Practices to Implement

1. Treat identity controls as accountability controls, not just access controls

In SaaS environments, insider risk isn’t about whether users can log in - it’s about whether their actions can be clearly attributed, analyzed in context, and acted on. Identity controls should support investigation and deterrence, not just authentication.

- Centralize identity across all SaaS applications

- Eliminate orphaned or ambiguous accounts

- Strengthen authentication for high-impact actions

Key takeaway: Insider risk thrives when accountability is unclear.

2. Continuously clean up over-permissioned users

Permissions in SaaS expand quietly over time, especially in shared drives and Slack channels. This creates invisible insider risk long before misuse occurs.

- Regularly audit and reduce broad group and workspace access

- Limit default access inheritance

- Prioritize least privilege in collaborative spaces like Workspace

Key takeaway: Over-permissioned access enables most insider incidents - even if intentions are good.

3. Eliminate access inheritance in shared drives and channels

Inherited access makes it nearly impossible to understand who can reach sensitive data, how long they’ve had it, and why. In SaaS, this is one of the most common precursors to insider misuse.

- Break inheritance for high-risk data locations

- Assign explicit owners to shared resources

- Review inherited access as a risk signal

Key takeaway: If access isn’t intentional, it’s a liability.
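One way to act on “review inherited access as a risk signal” is a periodic job over exported permission data. The Python sketch below assumes you have already normalized Drive and Slack permissions into simple records; the `Grant` fields, the `inherited` flag, and the `"high"` sensitivity label are hypothetical, not any platform’s actual schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Grant:
    # Hypothetical normalized permission record; not a real Drive or Slack schema.
    resource: str       # file, shared drive, or channel identifier
    principal: str      # user, group, or domain that holds access
    inherited: bool     # True if access flows from a parent folder, shared drive, or group
    sensitivity: str    # hypothetical label: "high", "medium", or "low"

def inherited_grants_to_review(grants, owners):
    """Return (inherited grants on high-sensitivity resources, high-risk resources with no owner)."""
    flagged = [g for g in grants if g.inherited and g.sensitivity == "high"]
    high_risk = {g.resource for g in grants if g.sensitivity == "high"}
    unowned = sorted(r for r in high_risk if r not in owners)
    return flagged, unowned

# Example with made-up data
grants = [
    Grant("finance/forecast.xlsx", "all-staff@example.com", True, "high"),
    Grant("finance/forecast.xlsx", "cfo@example.com", False, "high"),
    Grant("marketing/logo.png", "all-staff@example.com", True, "low"),
]
owners = {"marketing/logo.png": "brand-lead@example.com"}
flagged, unowned = inherited_grants_to_review(grants, owners)
print(flagged)   # the all-staff grant on the forecast
print(unowned)   # ['finance/forecast.xlsx']
```

Reporting unowned high-risk resources alongside inherited grants mirrors the second bullet: a resource without an explicit owner has no one accountable for approving or removing access.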

4. Review and reduce access before role changes and departures

Insider risk spikes during transitions, especially when employees try to take company IP with them to a new role or employer. Waiting until offboarding is too late, because exfiltration attempts often happen in the weeks leading up to the official offboarding date.

- Trigger access reviews ahead of role changes

- Reduce access to sensitive data early

- Monitor bulk actions during transition periods

Key takeaway: Timing matters more than intent in insider prevention.

5. Treat bots, OAuth apps, and service accounts as actual insider identities

Non-human identities often have broader access than users, with far less oversight. They need to be treated with the same rigor and oversight as human identities.

- Inventory all integrations and app permissions

- Limit permission scopes to minimum required access

- Review non-human access continuously

Key takeaway: Non-human identities are just as risky as human users.
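Scope reviews are easier to run continuously when they are reduced to a set comparison: what each app was granted versus what it was approved for. The sketch below uses real Google and Slack OAuth scope strings, but the app names, the inventory format, and the choice of which scopes count as “broad” are assumptions for illustration.

```python
# Real Google and Slack OAuth scope strings; treating these as "broad" is a policy assumption.
BROAD_SCOPES = {
    "https://www.googleapis.com/auth/drive",  # full read/write access to Drive
    "https://mail.google.com/",               # full access to Gmail
    "channels:history",                       # Slack: read messages in public channels the app is in
    "files:read",                             # Slack: read files shared where the app is present
}

def review_apps(inventory, approved):
    """Compare granted scopes with approved scopes for each app.

    inventory: {app_name: set of granted scopes}   (hypothetical export format)
    approved:  {app_name: set of approved scopes}  (hypothetical policy record)
    Returns only apps holding scopes beyond their approval.
    """
    findings = {}
    for app, granted in inventory.items():
        extra = set(granted) - set(approved.get(app, set()))
        if extra:
            findings[app] = {
                "excess": sorted(extra),
                "broad": sorted(extra & BROAD_SCOPES),
            }
    return findings

# Hypothetical apps: the exporter holds full Drive access but was approved only for drive.file
inventory = {
    "report-exporter": {"https://www.googleapis.com/auth/drive"},
    "standup-bot": {"chat:write"},
}
approved = {
    "report-exporter": {"https://www.googleapis.com/auth/drive.file"},
    "standup-bot": {"chat:write"},
}
print(review_apps(inventory, approved))
```

Apps with no approval record at all are treated as having every scope in excess, which surfaces unreviewed integrations by default.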

6. Clean up access before enabling GenAI and in-app LLM features

This one is huge, and it has only recently become a consideration for security teams. When companies enable Gemini or other AI assistants, the LLMs surface data based on existing permissions, amplifying any access mistakes that already exist.

- Reduce over-permissioned access BEFORE a company-wide LLM rollout

- Audit sensitive data exposure paths BEFORE AI adoption

- Treat AI enablement as a security milestone and a compliance check

Key takeaway: GenAI magnifies existing insider risk, so clean up permissions before widespread adoption.

7. Secure sharing workflows, not just files

In SaaS, data exposure happens through sharing, not storage.

- Focus controls on how data is shared, with whom, and for what reasons

- Govern who can share externally and how they’re sharing

- Monitor changes to sharing posture and take action if risky shares occur

Key takeaway: Data rarely leaks where it’s stored, it leaks when it’s shared.

8. Control link-based access as aggressively as direct user access

Public and unrestricted links bypass traditional access controls entirely.

- Limit public link creation by default

- Enforce expiration and scope restrictions

- Monitor link usage, not just creation

Key takeaway: Links are identities without accountability.
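Where a platform doesn’t let you set an expiry on a public link directly, a scheduled job can enforce one. The sketch below assumes hypothetical `SharedLink` records with a creation time and a last-view time (for example, assembled from sharing settings and audit logs); the 30-day maximum age and 14-day idle window are illustrative policy values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

@dataclass
class SharedLink:
    # Hypothetical record, e.g. assembled from sharing settings plus audit logs.
    file_id: str
    audience: str                  # hypothetical values: "anyone", "domain", "restricted"
    created: datetime
    last_viewed: Optional[datetime] = None

def links_to_revoke(links, now, max_age=timedelta(days=30), idle=timedelta(days=14)):
    """Public links older than max_age, or not viewed within idle, are revocation candidates."""
    candidates = []
    for link in links:
        if link.audience != "anyone":
            continue  # this sketch only governs public links
        too_old = now - link.created > max_age
        last_seen = link.last_viewed or link.created
        unused = now - last_seen > idle
        if too_old or unused:
            candidates.append(link.file_id)
    return candidates

utc = timezone.utc
now = datetime(2026, 1, 31, tzinfo=utc)
links = [
    SharedLink("a", "anyone", datetime(2025, 12, 1, tzinfo=utc)),   # older than 30 days
    SharedLink("b", "anyone", datetime(2026, 1, 25, tzinfo=utc),
               datetime(2026, 1, 30, tzinfo=utc)),                  # recent and in use
    SharedLink("c", "domain", datetime(2025, 1, 1, tzinfo=utc)),    # not public
    SharedLink("d", "anyone", datetime(2026, 1, 10, tzinfo=utc)),   # never viewed in 21 days
]
print(links_to_revoke(links, now))  # ['a', 'd']
```

Checking last view as well as age reflects the “monitor link usage” bullet: a public link nobody has opened in weeks is cheap to revoke.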

9. Treat Slack messages and threads as data exfiltration paths

Chat platforms are now primary data movement channels, especially Slack, where sensitive data is rarely deleted after it’s sent.

- Apply data sensitivity awareness to messages

- Monitor high-risk channels and conversations

- Reduce assumptions about “internal” safety

Key takeaway: Chat platforms and SaaS apps are a data plane, not just a means for communication.

10. Detect data replication, not just data access

Insider risk increases when sensitive data spreads across locations.

- Track copying, syncing, and re-sharing patterns

- Identify uncontrolled data proliferation

- Reduce duplicate exposure points

Key takeaway: The more places data lives, the harder it is to protect (and remediate!)

11. Constrain bulk actions by context, not just permission

Bulk downloads and exports are high-risk regardless of authorization.

- Apply contextual checks to bulk actions

- Monitor timing, volume, and sensitivity together

- Treat mass activity as a risk multiplier

Key takeaway: Scale turns normal actions into insider threats.
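To show what “monitor timing, volume, and sensitivity together” can look like, here is a minimal scoring sketch. The 50-file threshold and the point weights are illustrative assumptions, not recommended values; in practice they would be tuned against your own activity data.

```python
def bulk_action_risk(file_count, sensitive_count, off_hours, near_departure, bulk_threshold=50):
    """Score a bulk download or export from 0 to 100 using volume, sensitivity, and timing.

    All weights and the threshold are illustrative assumptions, not recommended values.
    Assumes 0 <= sensitive_count <= file_count.
    """
    if file_count < bulk_threshold:
        return 0                                   # below the bulk threshold: not scored here
    score = 30                                     # volume: bulk activity is a baseline concern
    score += (40 * sensitive_count) // file_count  # up to 40 points for the share of sensitive files
    if off_hours:
        score += 10                                # timing: outside the user's normal hours
    if near_departure:
        score += 20                                # timing: close to a known departure date
    return min(score, 100)

print(bulk_action_risk(20, 20, True, True))       # 0: too few files to count as bulk
print(bulk_action_risk(200, 0, False, False))     # 30
print(bulk_action_risk(200, 120, True, True))     # 84
```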

12. Apply sensitivity controls at the moment of collaboration

Preventing insider risk requires intervention during work, not after.

- Enforce controls while data is in motion, at the moment it’s shared or posted

- Provide guardrails at the point of action

- Reduce reliance on after-the-fact reviews

Key takeaway: Latency expands the attack surface, and prevention only works when it’s timely.

13. Detect intent signals in context, not isolated events

Single actions rarely indicate insider risk. Patterns do, especially when context about the user (role, scope, tenure, etc.) can be tied to the actions they’re taking.

- Correlate behavior over time and continuously compare to behavioral baselines

- Analyze what that user is doing and whether it makes sense within the context of their role and usual responsibilities

- Focus on trajectories and continuous actions, not just snapshots that are point-in-time

Key takeaway: Insider risk reveals itself in sequences and context.
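A simple way to compare activity with a behavioral baseline is a z-score against the user’s own history. This is a deliberately basic statistical stand-in for richer behavioral models; the 14-day minimum history and the 3-standard-deviation threshold are assumptions.

```python
from statistics import mean, pstdev

def deviation_from_baseline(history, today, min_days=14):
    """z-score of today's count against the user's own daily history.

    Returns None when there is too little history to form a baseline.
    """
    if len(history) < min_days:
        return None
    mu = mean(history)
    sigma = pstdev(history) or 1.0   # flat history: fall back to 1 to avoid dividing by zero
    return (today - mu) / sigma

def is_anomalous(history, today, threshold=3.0):
    z = deviation_from_baseline(history, today)
    return z is not None and z >= threshold

# Hypothetical daily counts of sensitive files downloaded by one user
history = [10, 12, 9, 11, 10, 13, 8, 10, 12, 11, 9, 10, 11, 10]
print(is_anomalous(history, 12))    # False: within normal variation
print(is_anomalous(history, 300))   # True: far outside this user's baseline
```

Comparing each user with their own history, rather than a company-wide average, keeps heavy but consistent users (finance, sales ops) from drowning out genuine deviations.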

14. Correlate access, data sensitivity, and timing

Again, context separates normal work from dangerous behavior. An action may look completely normal and business-as-usual, but layering context onto it can show that it’s actually risky and shouldn’t be happening.

- Integrate with an HRIS or IdP to gather data on each user’s role, tenure, department, scope, and other useful attributes

- Combine the who, what, and when signals and tie them to the actions they’re taking in SaaS

- Prioritize events near departures or role changes

Key takeaway: Context turns noisy everyday actions and alerts into clear, actionable insight and better risk modeling.
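Correlating SaaS activity with HR context can be as simple as checking whether a sensitive action falls inside a window before a known departure or role change. The `HrContext` fields below stand in for data you might pull from an HRIS or IdP; the field names, the 30-day window, and the priority labels are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class HrContext:
    # Hypothetical fields pulled from an HRIS or IdP; real field names vary by system.
    departure_date: Optional[date] = None
    role_change_date: Optional[date] = None

def within_window(event_day, milestone, days):
    """True if event_day falls on or within `days` days before the milestone."""
    return milestone is not None and 0 <= (milestone - event_day).days <= days

def prioritize(event_day, touches_sensitive_data, hr, window_days=30):
    """Combine what (sensitivity) and when (HR milestones) into a priority label."""
    if not touches_sensitive_data:
        return "low"
    if within_window(event_day, hr.departure_date, window_days):
        return "critical"
    if within_window(event_day, hr.role_change_date, window_days):
        return "high"
    return "medium"

leaver = HrContext(departure_date=date(2026, 2, 15))
mover = HrContext(role_change_date=date(2026, 3, 1))
print(prioritize(date(2026, 2, 1), True, leaver))    # critical: 14 days before departure
print(prioritize(date(2025, 11, 1), True, leaver))   # medium: outside the window
print(prioritize(date(2026, 2, 20), True, mover))    # high: 9 days before role change
```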

15. Suppress alerts that don’t lead to action

Alert fatigue is an insider risk in itself. When security teams are bogged down with chasing useless alerts, the real ones that demand attention slip through the cracks.

- Eliminate alerts that don’t have clear outcomes

- Prioritize signals tied to remediation

- Reduce volume of mindless alerts to increase response quality

Key takeaway: An alert without action is just noise.

16. Escalate risk only when remediation is possible

Not every risk requires immediate escalation. Unfortunately, many teams still rely on manual remediation, which is unreliable and doesn’t scale (more on that later). For this reason, SecOps teams need to be selective about which risks they escalate.

- Gate identity alerts by response readiness

- Align risk severity with the available controls

- Avoid creating cases you can’t resolve

Key takeaway: Escalation should follow your ability to remediate.

17. Tie every alert to a pre-approved response path

Response hesitation allows insider risk to persist.

- Define actions before incidents occur

- Pre-approve low-risk corrective steps

- Reduce decision friction during response

Key takeaway: Speed matters more than perfection.
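A pre-approved response path can be expressed as a lookup table agreed on before any incident: each alert type maps to one low-impact corrective action, and anything unmapped goes to a human. The alert type names and actions below are hypothetical labels; wiring them to real Google Workspace or Slack API calls is outside the scope of this sketch.

```python
from enum import Enum

class Action(Enum):
    # Hypothetical labels for low-impact corrective actions.
    REVOKE_PUBLIC_LINK = "revoke_public_link"
    REMOVE_EXTERNAL_COLLABORATOR = "remove_external_collaborator"
    DOWNGRADE_TO_VIEWER = "downgrade_to_viewer"
    REVOKE_OAUTH_GRANT = "revoke_oauth_grant"

# Hypothetical playbook agreed with security, HR, and legal before any incident.
# Alert types not listed here are routed to a human for review.
PLAYBOOK = {
    "public_link_on_sensitive_file": Action.REVOKE_PUBLIC_LINK,
    "external_share_to_personal_domain": Action.REMOVE_EXTERNAL_COLLABORATOR,
    "excessive_editor_access": Action.DOWNGRADE_TO_VIEWER,
    "unapproved_oauth_app": Action.REVOKE_OAUTH_GRANT,
}

def route(alert_type):
    """Return ("auto", action) for pre-approved responses, else ("review", None)."""
    action = PLAYBOOK.get(alert_type)
    if action is None:
        return ("review", None)
    return ("auto", action)

status, action = route("public_link_on_sensitive_file")
print(status, action.value)                     # auto revoke_public_link
print(route("mass_download_near_departure"))    # ('review', None)
```

Because the decision is made when the table is written, not when the alert fires, the response itself carries no decision friction.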

18. Automate the safest corrective action first

Most insider incidents don’t require drastic response, just a simple way to contain and eliminate the threat.

- Start with reversible, low-impact actions

- Contain exposure before investigating intent

- Preserve business continuity

Key takeaway: Small actions can prevent big losses.

19. Align insider response with HR and legal realities

Insider risk is as much a people issue as a technical one.

- Coordinate response thresholds in advance

- Respect privacy and employment considerations

- Avoid over-correcting without evidence

Key takeaway: Insider response must balance security and trust.

20. Measure insider risk by exposure reduced - not alerts generated

Success isn’t visibility; it’s risk reduction. Security leaders should report on insider risk with an outcomes-based approach, as they would for anything else they report on.

- Track reduced access, sharing, and exposure

- Measure remediation speed and impact

- Focus on outcomes, not general activity

Key takeaway: Fewer alerts mean nothing if risk stays the same.
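Outcome metrics like these reduce to simple arithmetic over before-and-after snapshots. The sketch below assumes you can count exposure metrics (public links, external shares, over-permissioned users) at two points in time and record when each issue was detected and remediated; the metric names and sample figures are illustrative, not real data.

```python
from datetime import datetime, timedelta
from statistics import median

def exposure_reduction(before, after):
    """Percent reduction for each exposure metric between two snapshots."""
    result = {}
    for metric, start in before.items():
        end = after.get(metric, 0)
        result[metric] = 0.0 if start == 0 else round(100 * (start - end) / start, 1)
    return result

def median_hours_to_remediate(pairs):
    """Median gap, in hours, between (detected, remediated) timestamps."""
    return median((fixed - found) / timedelta(hours=1) for found, fixed in pairs)

# Illustrative snapshots, not real data
before = {"public_links": 1200, "external_shares": 800, "over_permissioned_users": 150}
after = {"public_links": 300, "external_shares": 600, "over_permissioned_users": 150}
print(exposure_reduction(before, after))
# {'public_links': 75.0, 'external_shares': 25.0, 'over_permissioned_users': 0.0}

t = datetime(2026, 1, 5, 9, 0)
pairs = [
    (t, t + timedelta(hours=2)),
    (t, t + timedelta(minutes=30)),
    (t, t + timedelta(hours=10)),
]
print(median_hours_to_remediate(pairs))  # 2.0
```

Reporting both numbers together keeps the focus on how much exposure was removed and how quickly, rather than on how many alerts fired.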

Why Automation Is the Missing Layer in Modern Insider Threat Prevention

All the best practices outlined above are achievable, and many security teams are already attempting some version of them today. But for organizations still managing insider threat prevention and SaaS DLP manually, there’s an important reality worth acknowledging:

Manual processes don’t fail because teams lack expertise, they fail because SaaS environments move faster than humans can keep up.

In Google Workspace and Slack, access changes daily. Data is shared constantly. New integrations appear without notice. Users collaborate in ways that weren’t anticipated when the original policies were written.

Insider risk doesn’t emerge in neat, reviewable moments - it accumulates continuously.

That’s where the gap between best practices and actual outcomes begins.

Detection alone isn’t enough

Many organizations start with insider threat detection: logs, alerts, and dashboards that surface risky behavior. Detection and visibility are necessary, but they aren’t sufficient without a way to remediate what you detect.

Alerts without timely action allow exposure to persist and gaps to widen, leaving security teams to quickly become overwhelmed by volume rather than empowered by insight.

Without the ability to intervene directly, detection becomes a reporting exercise instead of a prevention strategy.

Data access governance must be continuous

Static access reviews and periodic audits don’t keep pace with modern collaboration. In SaaS, permissions drift constantly through shared drives, Slack channels, group membership changes, and OAuth apps.

Effective data access governance requires continuous enforcement - not a quarterly cleanup. That means identifying over-permissioned users as risk forms, not months after data has already spread.

DLP must operate where work actually happens

Traditional DLP was built around files and endpoints, but modern SaaS DLP must operate inside collaboration workflows where data is copied, pasted, shared, and discussed in real time.

Preventing insider risk requires sensitivity awareness at the moment of collaboration, not retrospective discovery after data has left its intended boundary.

Remediation is where prevention actually happens

This is the most common breaking point, and the capability most companies still don’t have.

Security teams can detect risk. They can even agree on the right response. But when remediation depends on manual investigation, ticketing systems, and cross-team coordination… exposure continues longer than it should.

Automated remediation doesn’t replace human judgment - it handles the obvious, repeatable, low-risk actions that don’t need debate:

- Revoking public links

- Removing unnecessary external access

- Downgrading excessive permissions

- Containing sensitive data movement

These actions are safe, reversible, and effective - and they dramatically reduce insider risk when applied consistently.

A SaaS security posture fit for 2026

As SaaS adoption deepens and GenAI accelerates data exposure, insider threat prevention will no longer be sustainable without automation.

A modern SaaS security posture requires four capabilities working together:

- Insider threat detection to surface risky behavior early

- Data access governance to limit unnecessary exposure

- SaaS-native DLP to protect sensitive data in collaboration tools

- Workflow-based remediation to reduce risk in real time

Automation is what connects these layers into a system that scales.

DoControl was built for organizations that recognize this shift - teams that want to move beyond manual cleanup and alert-driven fatigue toward consistent, preventative control over insider risk across SaaS.

Key Takeaways for Insider Threat Prevention in SaaS

1. Insider threats are a trusted-access problem: The most serious SaaS risks come from employees and users who already know where sensitive data lives and already have permission to use it.

2. SaaS changes how insider risk forms: Collaboration tools, link-based sharing, OAuth integrations, and GenAI amplify both intentional and unintentional misuse of data.

3. Permissions drift is the silent enabler: Over time, access expands faster than it’s manually reviewed - creating exposure long before any malicious action occurs.

4. Context matters more than intent: The same action can be harmless or dangerous depending on timing, sensitivity, and access scope.

5. Effective programs prioritize outcomes over noise: Fewer alerts, faster remediation, and reduced exposure are better indicators of success than raw visibility.

6. Detection alone is not prevention: Insider risk is only reduced when risky conditions are actively remediated, not just observed.

Conclusion

Insider threat prevention has evolved, and SaaS has forced the change.

In environments like Google Workspace and Slack, insider risk doesn’t announce itself as an attack. It builds quietly through permissive access, invisible data propagation, and workflows that prioritize collaboration over control. When misuse happens (whether accidental or intentional) it often relies on entirely legitimate actions.

That’s why modern insider threat prevention can’t rely on legacy models built around perimeter defenses or post-incident investigations. It requires a SaaS-native approach that assumes trusted access will be misused at some point and focuses on limiting impact before that misuse occurs.

The most effective programs don’t just detect insider threats. They:

- Reduce unnecessary access

- Protect sensitive data where collaboration happens

- Identify risky behavior early

- Remediate exposure automatically and consistently

When insider threat prevention is treated as an ongoing discipline - not a reactive process - organizations can enable productivity without accepting unnecessary risk.

The goal isn’t to eliminate trust in the employees who work across your SaaS environment.

It’s to make sure trust doesn’t become your greatest liability.

FAQ: Insider Threat Prevention in SaaS

What is insider threat prevention?

Insider threat prevention is the practice of reducing risk from employees, contractors, and users who already have legitimate access to systems and data. In SaaS environments, it focuses on limiting over-permissioned access, protecting sensitive data during collaboration, and remediating risky behavior before data is exposed.

What’s the difference between insider threat and insider risk?

An insider threat is a specific incident, such as data theft or unauthorized sharing. Insider risk refers to the underlying conditions — excessive access, broad sharing, lack of visibility — that make those incidents possible. Effective prevention focuses on reducing risk, not just responding to threats.

Why are insider threats harder to detect in SaaS?

In SaaS platforms, insider actions often look like normal work. Downloading files, sharing links, posting messages, or exporting data are all legitimate actions. Without context around sensitivity, timing, and behavior patterns, risky activity blends into everyday collaboration.

How do insider threats commonly occur in Google Workspace?

Common scenarios include public or unrestricted Drive links, over-shared folders, bulk exports from Sheets, excessive access through groups, and misuse during employee departures or role changes. These actions typically use valid permissions rather than exploits.

How do insider threats occur in Slack?

Slack insider risk often involves sensitive data shared in public channels, copied into messages or threads, accessed by external collaborators, or exposed through overly permissive bots and integrations. Slack is a primary data movement channel, not just a messaging tool.

Are insider threats usually malicious?

Insider threats can be malicious, negligent, or the result of compromised accounts. All three are dangerous because they rely on trusted access. Malicious insiders may intentionally take IP or customer data, while negligent actions can create equally severe exposure.

What’s the most effective way to prevent insider threats in SaaS?

The most effective approach combines:

- Continuous access and permission management

- SaaS-native data protection

- Behavioral context and intent signals

- Automated remediation of risky conditions

Prevention works best when it reduces exposure before misuse occurs.

How should organizations measure insider threat prevention success?

Success should be measured by risk reduction, not alert volume. Useful metrics include reduced over-permissioned access, fewer public links, lower external sharing, faster remediation times, and decreased sensitive data exposure across SaaS platforms.
